Rationale for the platform

Data analysis systems are now widespread for several reasons:

- Corporations pay much more attention to analyzing company activities in order to optimize their business, reduce costs and, consequently, increase profits.

- Falling data storage costs mean that data is no longer deleted but kept in archives. This trend makes it possible to analyze ever larger amounts of stored data.

- The cost of computing resources, including those provided through cloud services, keeps falling. As a result, there is no need to purchase, maintain and upgrade your own computer systems. This trend makes it easier for data analysis and processing systems to enter the market.

System requirements

- The ability to scale to arbitrarily large amounts of data without changing the algorithms.

- While the system is running, the applications whose data is being analyzed must not be suspended.

- High-quality visualization of the results.

- The ability to store analysis results so that they can be searched quickly.

- The ability to deploy the system in the Amazon Web Services cloud environment.

Project summary

The stackexchange.com websites form a growing network of communities that exchange information in various fields. The purpose of the resource is to collect high-quality questions and answers based on the experience of each community. A stackexchange.com question-and-answer website is an inherently dynamic source of knowledge. Because the communities update the information daily, the website can be regarded as a rich store of up-to-date knowledge.

Interest in structuring this information and getting the overall picture can arise in many cases:

- As a manufacturer of bicycle components, I do marketing research: which issues matter most to cyclists and which component deficiencies they discuss. I want to use this to improve my products or as a basis for an advertising campaign.

- I want to write a book on a particular subject, for instance Judaism. In addition to analyzing the literature, I want to know which questions readers focus on so that I can answer them in my book.

- I am a journalist at a specialized web resource. I want to identify topical issues and write useful review articles that attract attention and increase my web resource's popularity.

Platforms and technologies

Big data analysis: Apache Hadoop, Apache Mahout (machine learning library)

Cloud services: Amazon Elastic MapReduce, Amazon S3, Amazon EC2 (for databases and web applications)

Databases: Neo4j graph database, MongoDB cluster

Java and web technologies: core Java, Map-Reduce API, Struts 2, AngularJS, D3.js visualization library, HTML, CSS, Twitter Bootstrap

Unit testing: JUnit, MRUnit, Approval Tests for Map-Reduce

Platform architecture

Data calculations

The data source is the stackexchange.com dump. The data is processed on a cluster of computers, and the number of machines can be changed depending on the amount of data. The system was deployed in the Amazon cloud using the Amazon Elastic MapReduce service, which provides Hadoop clusters on demand.

The HDFS distributed file system is installed on the cluster machines. It stores large files across several machines at once and replicates the data. The Apache Hadoop framework, deployed on the same machines as HDFS, runs distributed computations on the cluster and takes advantage of data locality. The data analysis application runs on top of the cluster and the Apache Hadoop framework. It contains the implemented data preparation, processing and analysis algorithms and uses the Apache Mahout library of machine learning algorithms.

The data for all stackexchange.com websites comes in a single XML format: questions, answers, comments, user data, ratings, etc.

The algorithm for processing and analyzing stackexchange.com data:

- Data preparation for analysis.

- Text conversion to vector form.

- Question clustering.

- Interpretation of the results.

Data preparation

The text preprocessing algorithm:

- Extracting space-separated tokens (words) from the text.

- Converting the text to lowercase.

- Removing frequently used words that carry little meaning, such as: “what”, “where”, “how”, “when”, “why”, “which”, “were”, “find”, “myself”, “these”, “know”, “anybody”…

- Removing words whose length falls outside a set range.

- Normalizing words to their base form, without endings, case inflections, suffixes and so on. The Porter stemmer algorithm is convenient for this.
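The preprocessing steps above can be sketched in a few lines of plain Python. This is only an illustration, not the project's Map-Reduce code: the length bounds `MIN_LEN` and `MAX_LEN`, the punctuation stripping, and the function names are assumptions, and `crude_stem` is a deliberately simple stand-in for the Porter stemmer, not an implementation of it.

```python
import re

# Stop words quoted in the text above; a real list would be much longer.
STOP_WORDS = {
    "what", "where", "how", "when", "why", "which",
    "were", "find", "myself", "these", "know", "anybody",
}

# Assumed length window; the source does not state the actual bounds.
MIN_LEN, MAX_LEN = 3, 20

# Crude stand-in for the Porter stemmer: strips a few common English suffixes.
SUFFIXES = ("ing", "ed", "s")


def crude_stem(word: str) -> str:
    """Strip one common suffix, keeping at least three characters of stem."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text: str) -> list[str]:
    """Apply the preprocessing steps in order and return the tokens."""
    tokens = text.split()                                    # split on whitespace
    tokens = [t.lower() for t in tokens]                     # lowercase
    tokens = [re.sub(r"[^a-z0-9]", "", t) for t in tokens]   # strip punctuation (assumed extra step)
    tokens = [t for t in tokens if t not in STOP_WORDS]      # remove stop words
    tokens = [t for t in tokens if MIN_LEN <= len(t) <= MAX_LEN]  # length window
    return [crude_stem(t) for t in tokens]                   # reduce to a base form
```

For example, `preprocess("How were these brakes adjusted? Anybody know which pads fit")` keeps only the content words and reduces them to `brake`, `adjust`, `pad` and `fit`.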

Clustering

Clustering, or unsupervised classification, of text documents is the process of grouping documents by content similarity. The groups must be determined automatically. Traditionally, a linear vector space is used as the document model: each document is represented as a vector whose components correspond to words. The vector can cover all words in the document, only its most frequently used words, or n-grams, i.e. sequences of several words. The distance between vectors can be calculated, for example, as the Euclidean distance. TF-IDF was chosen for vectorization because it takes into account word frequency across the entire data set: it reduces the weight of commonly used words and, conversely, increases the weight of words that are distinctive for each question.
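To make the weighting concrete, here is a minimal TF-IDF sketch in plain Python. It assumes the textbook formula (relative term frequency multiplied by log(N / document frequency)); the weighting that Apache Mahout applies may differ in detail, and the function names are illustrative.

```python
import math
from collections import Counter


def tf_idf(docs: list[list[str]]) -> tuple[list[str], list[list[float]]]:
    """Return the vocabulary and one TF-IDF vector per tokenized document."""
    vocab = sorted({term for doc in docs for term in doc})
    n_docs = len(docs)
    # Document frequency: the number of documents in which each term occurs.
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append([
            # tf = relative frequency in the document, idf = log(N / df).
            (counts[term] / len(doc)) * math.log(n_docs / df[term]) if doc else 0.0
            for term in vocab
        ])
    return vocab, vectors


def euclidean(a: list[float], b: list[float]) -> float:
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
```

A term that occurs in every document gets a weight of zero, because log(N / N) = 0, while a term found in only a few questions keeps a positive weight. This is the behavior described above: common words are suppressed and distinctive words stand out.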

Distributed version of the K-means clustering algorithm:

- Select “k” random points as cluster centers.

- Distribute the data evenly across the machines of the computing cluster.

- Each machine iterates over all of its objects and finds the nearest cluster center for each one. The output is a set of object-cluster pairs.

- The objects are grouped by their newly assigned clusters and redistributed across the machines.

- Each machine iterates over the object-cluster pairs and recalculates the cluster centers.

- The shift of each new cluster center relative to the previous one is calculated.

- The assignment and recalculation steps are repeated until the shift of the cluster centers falls below a predetermined value or a predetermined number of iterations has been executed.
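The steps above can be simulated on a single machine to show the data flow. The sketch below is plain Python, not the Hadoop or Mahout implementation: the partitions stand in for cluster machines, `assign` plays the role of the map step, `recompute` plays the role of the shuffle and reduce steps, and all names and default parameter values are assumptions.

```python
import math
import random
from collections import defaultdict

Point = tuple[float, ...]


def distance(a: Point, b: Point) -> float:
    """Euclidean distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def assign(partition: list[Point], centers: list[Point]) -> list[tuple[int, Point]]:
    """Map step: pair each point with the index of its nearest center."""
    return [
        (min(range(len(centers)), key=lambda i: distance(p, centers[i])), p)
        for p in partition
    ]


def recompute(pairs: list[tuple[int, Point]], centers: list[Point]) -> list[Point]:
    """Shuffle and reduce step: group points by cluster and average them."""
    groups = defaultdict(list)
    for idx, point in pairs:
        groups[idx].append(point)
    new_centers = []
    for i, old in enumerate(centers):
        members = groups.get(i)
        if not members:  # an empty cluster keeps its previous center
            new_centers.append(old)
            continue
        new_centers.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
    return new_centers


def k_means(points, k, n_partitions=2, tolerance=1e-6, max_iterations=50, seed=0):
    """Run K-means until the centers stop moving or the iteration limit is hit."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # k random points as initial centers
    # Even split of the data across the simulated machines.
    partitions = [points[i::n_partitions] for i in range(n_partitions)]
    for _ in range(max_iterations):
        pairs = [pair for part in partitions for pair in assign(part, centers)]
        new_centers = recompute(pairs, centers)
        # Largest shift of any center relative to the previous iteration.
        shift = max(distance(a, b) for a, b in zip(centers, new_centers))
        centers = new_centers
        if shift < tolerance:
            break
    return centers
```

In a real cluster each partition would be processed by a different machine, and only the object-cluster pairs and the small list of centers would travel over the network between iterations.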

Data storage

The analysis results are stored in two databases: the graph-oriented Neo4j and the document-oriented MongoDB. This makes it possible to store the structure and interrelations of objects efficiently as a graph, while the object content (question texts) is stored as documents.

The database servers were deployed in the Amazon cloud using the Amazon EC2 service.

Data migration and visualization

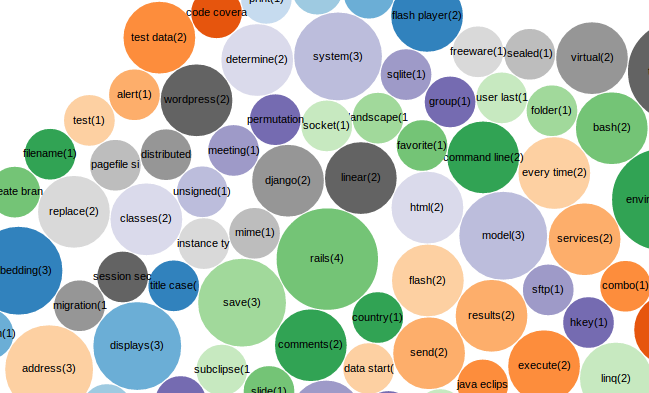

The web application displays the results as charts, graphs and diagrams. The migration server moves the source data into HDFS. After the data is processed, the results are also stored in HDFS. A special import utility, which runs as a Map-Reduce job, adds the results to the databases.

The source data is updated while the system is in operation. There is no need to transfer all of the data to HDFS again and rerun expensive computations after each update. Redis, a fast in-memory database, is used to solve this problem. The migration server copies the data from the information system and stores a hash key for each record. When reloading data into HDFS, the migration server queries the in-memory database to check whether the current record has already been loaded.

A web application was implemented to display the results. The server side is implemented in Java and queries the graph-oriented and document-oriented databases. The client side is implemented with JavaScript (AngularJS, jQuery), HTML, CSS and Twitter Bootstrap.
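The incremental loading check can be sketched as follows. This is a hypothetical illustration: a Python `set` stands in for the Redis key store, SHA-256 is an assumed choice of hash function (the source does not name one), and the class and method names are invented for the example.

```python
import hashlib


class MigrationTracker:
    """Remembers which records have already been copied to HDFS."""

    def __init__(self):
        # Stand-in for Redis; a real deployment would keep these keys in Redis.
        self._seen = set()

    @staticmethod
    def record_key(record: str) -> str:
        """Hash key of a record (SHA-256 is an assumption)."""
        return hashlib.sha256(record.encode("utf-8")).hexdigest()

    def select_new(self, records):
        """Return only the records not seen before and mark them as seen."""
        fresh = []
        for record in records:
            key = self.record_key(record)
            if key not in self._seen:
                self._seen.add(key)
                fresh.append(record)
        return fresh
```

On a second run with a partly overlapping batch, `select_new` returns only the records that were not loaded earlier, so only those need to be transferred and processed again.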

Amounts of data processed by the system

| Object | 7z archive size | Unpacked data size |
| --- | --- | --- |
| Dump of all data from the stackexchange.com websites | ~15.7 GB | ~448 GB |
| stackoverflow.com website data | ~12.1 GB | ~345 GB |
| serverfault.com website data | ~303 MB | ~8.6 GB |

Project result

The developed platform analyzes the data of the stackexchange.com websites and gives an overall picture of their content. The system collects and loads the initial data, prepares it for processing, clusters it, and stores and interprets the results. The following tasks were solved during development: collecting and loading the initial data; storing data across several machines with protection against the failure of a hard drive; preparing data for processing through filtering and vectorization; processing data on a cluster of several machines; and storing and interpreting the results. Using Amazon cloud services is a great advantage because they eliminate the need to configure the whole infrastructure manually.

The company’s achievements during the project:

- The Apache Mahout machine learning library was mastered and put to use.

- The Neo4j graph-oriented database was successfully integrated.

- A series of Map-Reduce jobs for data preprocessing was developed.

- Optimal parameters for the machine learning algorithms were selected.